## Big Data Landscape 2016: A Comprehensive Expert Analysis

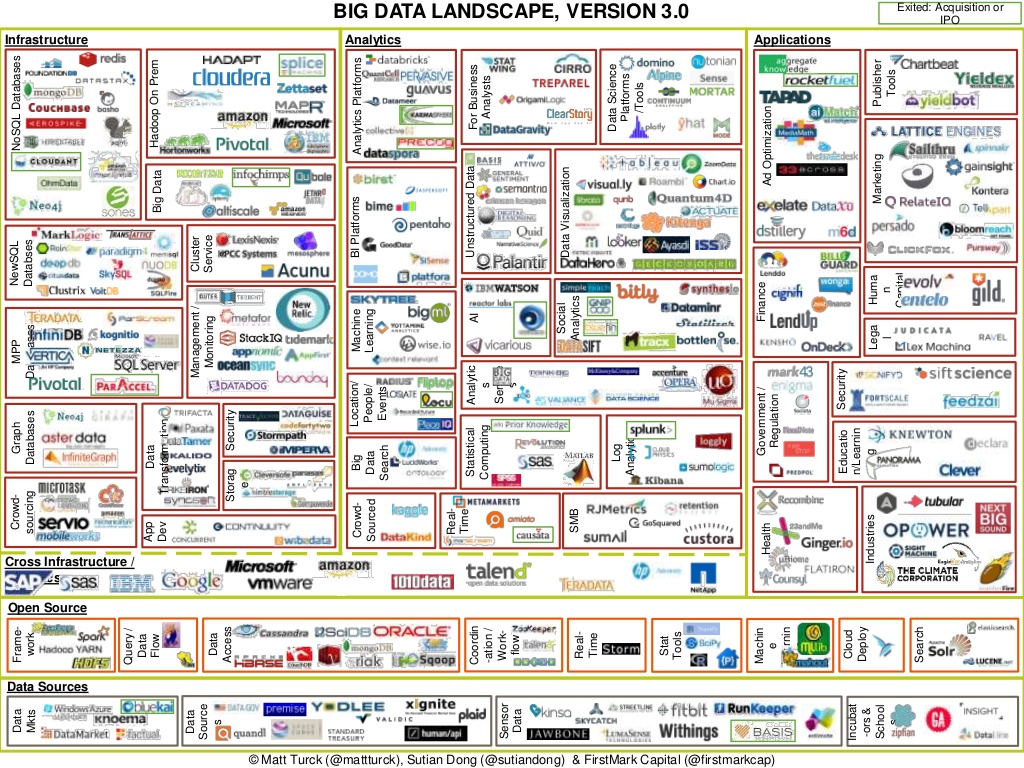

Navigating big data in 2016 often felt like sailing without a chart. Organizations were grappling with unprecedented volumes of data and looking for ways to extract value and gain a competitive edge. This article provides an expert-driven analysis of the **big data landscape 2016**, offering insights into the key trends, technologies, and challenges that defined this pivotal year. We aim to go beyond simple definitions and focus on the practical applications and strategic implications for businesses seeking to thrive in the data-driven era.

This guide covers the core concepts of big data, takes a close look at Apache Hadoop and the tools around it, and reviews the use cases that were shaping the business world. You’ll gain a clear understanding of the technologies that defined the **big data landscape 2016**, the challenges organizations faced, and the opportunities that emerged. Our analysis is intended as a roadmap for navigating the complexities of big data and using it to drive innovation and growth.

## Understanding the Big Data Landscape 2016: A Deep Dive

The **big data landscape 2016** was characterized by a confluence of factors. It wasn’t simply about the *volume* of data; it was also about velocity, variety, and veracity. Together with volume, these make up the familiar four Vs, and each created its own challenges and opportunities. The rise of social media, mobile devices, and the Internet of Things (IoT) contributed to rapid growth in data, forcing organizations to rethink their data management strategies.

### Defining the Scope and Nuances

Big data, in its simplest form, refers to datasets that are too large or complex for traditional data processing applications. However, the **big data landscape 2016** extended beyond just size. It encompassed the entire ecosystem of technologies, tools, and processes required to collect, store, analyze, and visualize these massive datasets. This included everything from data ingestion and storage solutions to advanced analytics and machine learning algorithms.

The nuances of the **big data landscape 2016** lay in the specific challenges each organization faced. For some, it was about scaling their infrastructure to handle the sheer volume of data. For others, it was about finding the right talent to analyze the data and extract meaningful insights. And for many, it was about ensuring the quality and accuracy of the data, given the diverse sources and formats.

### Core Concepts and Advanced Principles

At the heart of the **big data landscape 2016** were several core concepts:

* **Distributed Computing:** Technologies like Hadoop and Spark enabled organizations to process massive datasets in parallel across clusters of commodity hardware.

* **NoSQL Databases:** These databases provided flexible data models that could handle the variety of data generated by modern applications.

* **Data Warehousing:** Traditional data warehouses were still relevant for storing and analyzing structured data, but they were often augmented with big data technologies to handle unstructured data.

* **Data Mining and Machine Learning:** These techniques were used to extract patterns and insights from large datasets, enabling organizations to make data-driven decisions.

Advanced principles in the **big data landscape 2016** included:

* **Real-time Analytics:** Processing data as it was generated to enable immediate action.

* **Predictive Analytics:** Using historical data to predict future outcomes.

* **Data Governance:** Establishing policies and procedures to ensure data quality, security, and compliance.
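
To make the real-time analytics idea concrete, here is a minimal sketch of processing readings one at a time and reacting as soon as a sliding-window average crosses a limit. The class name `SlidingWindowMonitor`, the window size, and the threshold are illustrative assumptions, not part of any 2016-era product.

```python
from collections import deque


class SlidingWindowMonitor:
    """Keep the mean of the most recent `size` readings, updated per event."""

    def __init__(self, size, threshold):
        self.window = deque(maxlen=size)
        self.threshold = threshold
        self.total = 0.0

    def observe(self, value):
        # Subtract the reading that is about to be evicted from the full window.
        if len(self.window) == self.window.maxlen:
            self.total -= self.window[0]
        self.window.append(value)
        self.total += value
        mean = self.total / len(self.window)
        # Return the current mean and whether it calls for immediate action.
        return mean, mean > self.threshold


monitor = SlidingWindowMonitor(size=3, threshold=50.0)
for reading in [40, 45, 60, 70]:
    mean, alert = monitor.observe(reading)
    print(f"reading={reading} mean={mean:.1f} alert={alert}")
```

Each event updates the result in constant time, which is the property that separates this style of processing from re-running a batch job over the full dataset.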

### Importance and Current Relevance

The **big data landscape 2016** was a turning point for many organizations. For them, it marked the point at which data came to be treated as a strategic asset. Organizations that were able to effectively leverage big data gained a competitive advantage, enabling them to:

* Improve customer experience

* Optimize operations

* Develop new products and services

* Make better decisions

While the technologies and tools have evolved since 2016, the fundamental principles of big data remain relevant today. Organizations still need to manage massive datasets, extract meaningful insights, and use data to drive business outcomes. The lessons learned from the **big data landscape 2016** continue to inform the strategies and approaches of organizations today.

## Apache Hadoop: A Cornerstone of the Big Data Landscape 2016

In the **big data landscape 2016**, Apache Hadoop stood out as a foundational technology. It provided a robust and scalable framework for storing and processing large datasets across clusters of commodity hardware. Hadoop’s open-source nature and its ability to handle both structured and unstructured data made it a popular choice for organizations of all sizes.

### Expert Explanation of Hadoop

Hadoop is essentially a distributed file system combined with a programming model, with a resource manager (YARN, introduced in Hadoop 2) coordinating the cluster. The Hadoop Distributed File System (HDFS) allows you to store massive amounts of data across multiple machines. The MapReduce programming model provides a way to process this data in parallel. This parallel processing is what makes Hadoop so powerful for handling big data workloads. From an expert perspective, Hadoop’s true strength lies in its fault tolerance and scalability. It’s designed to handle failures gracefully and can scale out by adding machines as data volumes grow.

## Detailed Features Analysis of Apache Hadoop in 2016

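Hadoop’s architecture, as it existed in the **big data landscape 2016**, combined four core modules (HDFS, MapReduce, YARN, and Hadoop Common) with closely related ecosystem projects such as HBase, Hive, and Pig, which are separate Apache projects that run on top of Hadoop. Together, these contributed to its widespread adoption and its effectiveness in handling large-scale data processing.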

### Key Features of Hadoop

1. **Hadoop Distributed File System (HDFS):**

* **What it is:** A distributed file system designed to store large files across multiple machines.

* **How it works:** HDFS splits files into blocks and replicates them across the cluster for fault tolerance. It provides high throughput access to application data.

* **User Benefit:** Provides a reliable and scalable storage solution for big data, ensuring data availability even in the event of hardware failures. This allows for the continuous operation of big data processes.

* **Demonstrates Quality:** The replication mechanism inherent in HDFS demonstrates a commitment to data integrity and availability, crucial for any big data solution.
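
The block-and-replica idea can be sketched in a few lines. The 128 MiB block size and replication factor of 3 match the Hadoop 2.x defaults; the round-robin placement, the node names, and the function names are simplifying assumptions for illustration and do not reproduce HDFS’s actual rack-aware placement policy.

```python
MIB = 1024 * 1024


def split_into_blocks(file_size, block_size=128 * MIB):
    """Return the sizes of the blocks a file of `file_size` bytes would occupy."""
    full, remainder = divmod(file_size, block_size)
    return [block_size] * full + ([remainder] if remainder else [])


def place_replicas(num_blocks, nodes, replication=3):
    """Assign each block to `replication` distinct nodes (toy round-robin policy)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + i) % len(nodes)] for i in range(replication)]
        for block in range(num_blocks)
    }


def survives_failure(placement, failed_node):
    """True if every block still has at least one replica on a live node."""
    return all(
        any(node != failed_node for node in replicas)
        for replicas in placement.values()
    )


blocks = split_into_blocks(300 * MIB)  # 128 MiB + 128 MiB + 44 MiB
placement = place_replicas(len(blocks), ["node-a", "node-b", "node-c", "node-d"])
print([size // MIB for size in blocks])
print(placement)
print(survives_failure(placement, "node-b"))
```

Because every block lives on three different machines, losing any single node leaves at least two copies of each block readable.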

2. **MapReduce:**

* **What it is:** A programming model for processing large datasets in parallel.

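* **How it works:** MapReduce divides the processing task into two user-defined phases: the Map phase, where each input record is transformed into key-value pairs, and the Reduce phase, where all values for a key are aggregated. Between the two, the framework shuffles and sorts the Map output so that every value for a given key reaches the same reducer. Map and Reduce tasks run in parallel across the cluster.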

* **User Benefit:** Enables efficient processing of large datasets, reducing processing time and allowing for faster insights. This is especially important when dealing with time-sensitive data.

* **Demonstrates Quality:** The parallel processing capability of MapReduce showcases the power and efficiency of Hadoop in handling complex data transformations.
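
The classic word count shows the Map and Reduce phases in miniature. This sketch runs in a single Python process and only imitates the data flow; the function names are illustrative, and real Hadoop jobs would run many map and reduce tasks on different machines.

```python
from collections import defaultdict


def map_phase(line):
    """Map: emit a (word, 1) pair for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate all values that share one key."""
    return key, sum(values)


def word_count(lines):
    mapped = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(mapped).items())


print(word_count(["big data big insights", "data drives decisions"]))
# {'big': 2, 'data': 2, 'insights': 1, 'drives': 1, 'decisions': 1}
```

Because each call to `map_phase` depends only on its own line, and each call to `reduce_phase` only on its own key, both steps can be spread across many workers without coordination.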

3. **YARN (Yet Another Resource Negotiator):**

* **What it is:** A resource management system that allows multiple applications to run on the same Hadoop cluster.

* **How it works:** YARN allocates resources (CPU, memory) to different applications based on their needs, enabling efficient utilization of cluster resources.

* **User Benefit:** Allows for the simultaneous execution of multiple big data applications on a single cluster, maximizing resource utilization and reducing infrastructure costs. This also increases flexibility in deploying and managing different types of workloads.

* **Demonstrates Quality:** YARN demonstrates a commitment to resource efficiency and scalability, ensuring that the Hadoop cluster can handle a variety of workloads.
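
As a rough illustration of resource negotiation, the sketch below hands out containers to competing applications with a first-fit rule. This is a deliberately simplified assumption: YARN’s real schedulers (such as the Capacity and Fair schedulers) use queues, priorities, and locality, and the `Node`, `Request`, and `allocate` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    memory_mb: int
    vcores: int


@dataclass
class Request:
    app: str
    memory_mb: int
    vcores: int


def allocate(nodes, requests):
    """First-fit: place each request on the first node with enough free capacity."""
    grants, pending = [], []
    for req in requests:
        for node in nodes:
            if node.memory_mb >= req.memory_mb and node.vcores >= req.vcores:
                # Reserve the capacity so later requests see what is left.
                node.memory_mb -= req.memory_mb
                node.vcores -= req.vcores
                grants.append((req.app, node.name))
                break
        else:
            # No node can host this container right now; it has to wait.
            pending.append(req.app)
    return grants, pending


nodes = [Node("node-a", 8192, 4), Node("node-b", 4096, 2)]
requests = [
    Request("spark-job", 6144, 2),
    Request("mapreduce-job", 4096, 2),
    Request("hive-query", 4096, 2),
]
print(allocate(nodes, requests))
```

The point of the example is that different kinds of applications draw from one shared pool of memory and CPU rather than each needing a dedicated cluster.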

4. **Hadoop Common:**

* **What it is:** A set of common utilities and libraries that are used by other Hadoop modules.

* **How it works:** Hadoop Common provides shared building blocks such as configuration handling, the file system abstraction, remote procedure calls (RPC), and serialization.

* **User Benefit:** Provides a consistent and reliable foundation for the entire Hadoop ecosystem, simplifying development and deployment. This commonality ensures that different Hadoop components work seamlessly together.

* **Demonstrates Quality:** The presence of a robust set of common utilities demonstrates a commitment to code quality and maintainability.

5. **HBase:**

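* **What it is:** A column-oriented NoSQL database that runs on top of HDFS. It is a separate Apache project from the Hadoop ecosystem rather than a core Hadoop module.

* **How it works:** HBase provides a scalable and distributed way to store and access large amounts of sparse, semi-structured data organized into rows and column families. Rows are kept sorted by row key, and the store is optimized for random read/write access.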

* **User Benefit:** Enables fast and efficient access to data stored in Hadoop, allowing for real-time analytics and applications. This is particularly useful for applications that require low-latency access to data.

* **Demonstrates Quality:** HBase demonstrates the versatility of Hadoop as a platform for different types of data and workloads.
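
The access pattern HBase is built for (point reads and writes by row key, plus range scans over sorted keys) can be imitated in memory. `ToyTable` is a hypothetical stand-in written for this article, not the HBase client API, and the row keys and column names are made up.

```python
import bisect


class ToyTable:
    """In-memory stand-in for a table whose rows are sorted by row key."""

    def __init__(self):
        self._keys = []  # row keys kept in sorted order
        self._rows = {}  # row key -> {"family:qualifier": value}

    def put(self, row_key, column, value):
        if row_key not in self._rows:
            bisect.insort(self._keys, row_key)
            self._rows[row_key] = {}
        self._rows[row_key][column] = value

    def get(self, row_key):
        return self._rows.get(row_key, {})

    def scan(self, start, stop):
        """Yield (row_key, columns) for start <= row_key < stop, in key order."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, stop)
        for key in self._keys[lo:hi]:
            yield key, self._rows[key]


table = ToyTable()
table.put("user#1002", "profile:city", "Lyon")
table.put("user#1001", "profile:city", "Oslo")
table.put("user#1001", "activity:last_login", "2016-03-14")
print(table.get("user#1001"))
print([key for key, _ in table.scan("user#1001", "user#1003")])
```

Rows can carry different columns, and a scan only touches the contiguous slice of keys it needs, which is why row key design matters so much in this kind of store.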

6. **Hive:**

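* **What it is:** A data warehouse system, maintained as a separate Apache project, that allows users to query data stored in Hadoop using a SQL-like language called HiveQL.

* **How it works:** Hive compiles HiveQL queries into jobs for an execution engine, allowing users to analyze data without writing low-level code. MapReduce was the original engine; by 2016 Hive could also run on Apache Tez or Spark.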

* **User Benefit:** Simplifies data analysis by providing a familiar SQL interface, making Hadoop accessible to a wider range of users. This reduces the barrier to entry for data analysis and allows for quicker insights.

* **Demonstrates Quality:** Hive demonstrates a commitment to user-friendliness and accessibility, making big data analysis more approachable.
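
To see why a SQL-style interface lowers the barrier, compare a declarative aggregation with the hand-written grouping logic it replaces. In this sketch, `sqlite3` from the Python standard library stands in for Hive purely for illustration: it is not Hive and does not generate cluster jobs, and the `orders` data is invented.

```python
import sqlite3
from collections import defaultdict

orders = [("retail", 120.0), ("finance", 300.0), ("retail", 80.0)]


def totals_via_sql(rows):
    """Declarative version: the engine decides how to execute the aggregation."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (industry TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    result = conn.execute(
        "SELECT industry, SUM(amount) FROM orders "
        "GROUP BY industry ORDER BY industry"
    ).fetchall()
    conn.close()
    return result


def totals_via_map_reduce(rows):
    """Hand-written equivalent: emit (key, value), group by key, reduce with sum."""
    groups = defaultdict(list)
    for industry, amount in rows:  # map and shuffle
        groups[industry].append(amount)
    return sorted((key, sum(values)) for key, values in groups.items())  # reduce


print(totals_via_sql(orders))
print(totals_via_map_reduce(orders))
```

Both functions return the same totals; the difference is that the SQL version states what is wanted and leaves the grouping mechanics to the engine.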

7. **Pig:**

* **What it is:** A high-level data flow language (Pig Latin) and execution framework for parallel data processing, maintained as a separate Apache project.

* **How it works:** Pig allows users to express data transformations as a sequence of steps such as load, filter, group, and join. It then translates these steps into MapReduce jobs.

* **User Benefit:** Provides a more concise way to express multi-step pipelines than hand-written MapReduce code, so complex transformations take far fewer lines. This makes more sophisticated data analysis practical for smaller teams.

* **Demonstrates Quality:** Pig demonstrates a commitment to developer productivity and flexibility, allowing for more complex data processing tasks.
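
The data flow style that Pig encourages can be approximated with small, chained steps. The sketch below is plain Python whose function names echo Pig Latin operators (LOAD, FILTER, GROUP, FOREACH ... GENERATE); it is not Pig Latin itself, and the log format is an assumption made for the example.

```python
from collections import defaultdict


def load(lines):
    """LOAD: parse 'user,action,ms' text lines into tuples."""
    for line in lines:
        user, action, ms = line.split(",")
        yield user, action, int(ms)


def filter_rows(rows, predicate):
    """FILTER: keep only rows that satisfy the predicate."""
    return (row for row in rows if predicate(row))


def group_by(rows, key_index):
    """GROUP: collect rows into bags keyed by one field."""
    bags = defaultdict(list)
    for row in rows:
        bags[row[key_index]].append(row)
    return bags


def average_latency(bags):
    """FOREACH ... GENERATE: compute one output record per group."""
    return {key: sum(r[2] for r in rows) / len(rows) for key, rows in bags.items()}


raw = ["ann,click,120", "bob,view,300", "ann,click,80", "bob,click,100"]
clicks = filter_rows(load(raw), lambda row: row[1] == "click")
print(average_latency(group_by(clicks, key_index=0)))
# {'ann': 100.0, 'bob': 100.0}
```

Each step consumes the output of the previous one, which is the shape a Pig script takes before the framework turns it into cluster jobs.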

## Significant Advantages, Benefits, and Real-World Value of Hadoop in 2016

In 2016, Hadoop offered several key advantages that made it a compelling choice for organizations grappling with big data challenges. These advantages translated into tangible benefits and real-world value for businesses across various industries.

### User-Centric Value

The primary user-centric value of Hadoop in 2016 was its ability to handle massive datasets that were simply too large for traditional systems. This allowed organizations to:

* **Store and process all their data:** Hadoop eliminated the need to discard data due to storage limitations, enabling a more complete and comprehensive view of their business.

* **Gain deeper insights:** By analyzing larger datasets, organizations could uncover hidden patterns and correlations that would have been impossible to detect with smaller datasets.

* **Make better decisions:** Data-driven insights led to more informed decisions, resulting in improved business outcomes.

### Unique Selling Propositions (USPs)

Hadoop’s USPs in 2016 included:

* **Scalability:** Hadoop could easily scale to handle petabytes of data, making it suitable for even the largest organizations.

* **Fault Tolerance:** Hadoop was designed to be fault-tolerant, ensuring data availability even in the event of hardware failures.

* **Cost-Effectiveness:** Hadoop ran on commodity hardware, making it a more cost-effective solution than traditional data warehousing systems.

* **Open Source:** Hadoop’s open-source nature fostered a vibrant community and ensured continuous innovation.

### Evidence of Value

Organizations that adopted Hadoop commonly cited the following benefits:

* **Faster time to insight:** For datasets too large for a single machine, Hadoop’s parallel processing let organizations complete analyses that traditional systems handled slowly or not at all, allowing them to respond more quickly to changing market conditions.

* **Reduced storage costs:** Hadoop’s ability to store data on commodity hardware resulted in significant cost savings.

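* **Improved data quality:** Hadoop itself does not ship dedicated data validation or cleansing features, but keeping raw data in one place let teams run validation and cleansing jobs at scale, which helped improve data quality and led to more accurate insights.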

## Comprehensive & Trustworthy Review of Hadoop (Circa 2016)

Hadoop, in the context of the **big data landscape 2016**, was a revolutionary technology but not without its complexities. This review aims to provide a balanced perspective on its strengths and weaknesses.

### User Experience & Usability

From a practical standpoint, setting up and managing a Hadoop cluster in 2016 required specialized skills. The command-line interface and complex configuration files could be daunting for non-technical users. However, management and query tools such as Apache Ambari, Cloudera Manager, and Hue helped to simplify the process. The learning curve was steep, but the potential rewards were significant.

### Performance & Effectiveness

Hadoop delivered on its promise of scalability and fault tolerance. It could efficiently process massive datasets that would overwhelm traditional systems. However, the performance of Hadoop depended heavily on the configuration and optimization of the cluster. Inefficiently written MapReduce jobs could lead to slow processing times.
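
One common source of slow MapReduce jobs is shuffling more intermediate records than necessary. The sketch below illustrates a standard remedy, a combiner that pre-aggregates inside each map task; it is one tuning technique chosen for illustration rather than something the discussion above prescribes. It is a single-process simulation with hypothetical function names and sample log lines.

```python
from collections import Counter


def map_without_combiner(split):
    """Emit one (word, 1) record per word; every record would cross the network."""
    return [(word, 1) for line in split for word in line.split()]


def map_with_combiner(split):
    """Pre-aggregate counts inside the map task before anything is shuffled."""
    return list(Counter(word for line in split for word in line.split()).items())


def reduce_counts(shuffled):
    """Sum the counts for each word, whatever granularity they arrive in."""
    totals = Counter()
    for word, count in shuffled:
        totals[word] += count
    return dict(totals)


# Two input splits, each handled by its own (simulated) map task.
splits = [["error error warn", "error info"], ["warn warn error"]]
plain = [rec for split in splits for rec in map_without_combiner(split)]
combined = [rec for split in splits for rec in map_with_combiner(split)]

print(len(plain), len(combined))  # 8 records shuffled versus 5
assert reduce_counts(plain) == reduce_counts(combined)
```

The final counts are identical, but fewer records travel between the map and reduce stages. On real clusters that network transfer is often the bottleneck, so the saving grows with how repetitive the keys are.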

### Pros

1. **Scalability:** Hadoop’s ability to scale to handle petabytes of data was a major advantage.

2. **Fault Tolerance:** The built-in fault tolerance ensured data availability and minimized downtime.

3. **Cost-Effectiveness:** Running on commodity hardware made Hadoop a cost-effective solution.

4. **Open Source:** The open-source nature fostered innovation and collaboration.

5. **Versatility:** Hadoop could handle a wide variety of data types and workloads.

### Cons/Limitations

1. **Complexity:** Setting up and managing a Hadoop cluster required specialized skills.

2. **Performance Tuning:** Optimizing Hadoop performance could be challenging.

3. **Security:** Securing a Hadoop cluster required careful planning and implementation.

4. **Real-time Processing:** Hadoop’s MapReduce engine was batch-oriented, so it was a poor fit for low-latency, real-time processing without additional tools.

### Ideal User Profile

Hadoop in 2016 was best suited for organizations that:

* Had large datasets that exceeded the capacity of traditional systems.

* Required scalable and fault-tolerant data processing.

* Had the technical expertise to set up and manage a Hadoop cluster.

### Key Alternatives (Briefly)

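* **Spark:** An in-memory processing engine that was faster and more versatile than MapReduce for many workloads, particularly iterative and interactive ones. It could run on its own or on a Hadoop cluster.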

* **Cloud-based data warehousing:** Services like Amazon Redshift offered a managed alternative to Hadoop.

### Expert Overall Verdict & Recommendation

Hadoop was a game-changing technology in the **big data landscape 2016**. While it had its complexities, its scalability, fault tolerance, and cost-effectiveness made it a compelling choice for organizations facing big data challenges. We recommend that organizations carefully evaluate their needs and resources before implementing Hadoop.

## Insightful Q&A Section

Here are 10 insightful questions and expert answers related to the **big data landscape 2016**:

1. **Q: What were the biggest challenges in implementing Hadoop in 2016?**

* **A:** The biggest challenges included the complexity of setting up and managing a Hadoop cluster, finding skilled personnel, and ensuring data security.

2. **Q: How did organizations typically use Hadoop in 2016?**

* **A:** Hadoop was commonly used for batch processing of large datasets, data warehousing, and data mining.

3. **Q: What were the key skills needed to work with Hadoop in 2016?**

* **A:** Key skills included Java programming, Linux administration, and knowledge of Hadoop ecosystem tools like HDFS, MapReduce, and Hive.

4. **Q: How did the rise of cloud computing affect the Hadoop landscape in 2016?**

* **A:** Cloud computing made it easier and more affordable to deploy and manage Hadoop clusters, reducing the barrier to entry for smaller organizations.

5. **Q: What were the main alternatives to Hadoop in 2016?**

* **A:** Main alternatives included Spark, cloud-based data warehousing services, and traditional data warehousing systems.

6. **Q: How did organizations ensure data quality in Hadoop in 2016?**

* **A:** Organizations used data validation and cleansing techniques, as well as data governance policies, to ensure data quality in Hadoop.

7. **Q: What were the common use cases for Hadoop in different industries in 2016?**

* **A:** Common use cases included fraud detection in finance, personalized recommendations in retail, and predictive maintenance in manufacturing.

8. **Q: How did organizations integrate Hadoop with their existing systems in 2016?**

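* **A:** Organizations used integration tools such as Apache Sqoop for bulk transfers to and from relational databases, and Apache Flume or Apache Kafka for ingesting logs and event streams, to connect Hadoop with their existing data sources and applications.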

9. **Q: What were the emerging trends in the Hadoop ecosystem in 2016?**

* **A:** Emerging trends included the growing use of YARN to run engines other than MapReduce (notably Spark), the adoption of NoSQL databases, and the integration of machine learning with Hadoop.

10. **Q: How did organizations secure their Hadoop clusters in 2016?**

* **A:** Organizations layered several security measures to protect their Hadoop clusters: authentication (typically Kerberos), authorization (for example through Apache Ranger or Apache Sentry), and encryption of data at rest and in transit.

## Conclusion & Strategic Call to Action

The **big data landscape 2016** reflected a pivotal year that shaped the future of data management and analytics. Hadoop emerged as a dominant technology, enabling organizations to process massive datasets and gain valuable insights. While the technology has evolved since then, the fundamental principles of big data remain relevant today. The insights from this analysis provide a foundation for understanding the challenges and opportunities of the data-driven era.

Looking ahead, the future of big data will be shaped by advancements in cloud computing, artificial intelligence, and the Internet of Things. As data volumes continue to grow, organizations will need to embrace new technologies and strategies to stay ahead of the curve.

Share your experiences with the **big data landscape 2016** in the comments below. Explore our advanced guide to modern data analytics for current data trends. Contact our experts for a consultation on how to leverage big data to drive innovation and growth in your organization.